Local Language Models and Security: Separating Fact from Fiction

There are a lot of misconceptions, overdramatic FUD, and zealotry around locally run models. Proponents claim they're inherently more secure; sceptics argue they create new vulnerabilities. Many of these claims miss the mark completely. The truth, as always, is more nuanced. Let's bust some myths and examine what local LLMs actually mean for your security posture.

The Myths We Need to Address

Myth 1: "Local models are inherently more secure"

This is perhaps the most pervasive misconception. Running a model locally does eliminate one specific, and legitimate, attack vector: your data no longer travels across networks to third-party servers. That's genuinely valuable. But it doesn't make your setup "more secure" in any absolute sense.

What actually happens is that security responsibility shifts. You're now accountable for securing the model files themselves (including their provenance and integrity), the inference server, access controls, dependency management, and ongoing vulnerability patching. For organisations without dedicated security expertise, this can actually increase overall risk.

The reality: Security doesn't disappear - it relocates. Whether that's better or worse depends entirely on your capabilities and threat model.
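One concrete part of that relocated responsibility is confirming that the model files you load are the ones the publisher actually released. The following is a minimal sketch, assuming the publisher provides a SHA-256 checksum for each weight file (many do, but confirm this for your source); the function names are illustrative and not taken from any particular tool:

```python
import hashlib
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in fixed-size chunks so multi-gigabyte weights never need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        # iter() with a sentinel keeps reading until read() returns b"" at end of file.
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model_file(path: Path, expected_sha256: str) -> bool:
    """Return True only if the on-disk file matches the checksum published by its source."""
    actual = sha256_of_file(path)
    # hexdigest() is lower-case, so normalise the published value before comparing.
    return actual == expected_sha256.strip().lower()
```

For example, `verify_model_file(Path("model.gguf"), published_checksum)` (a hypothetical filename and variable) would return `False` for a corrupted or tampered download. A matching checksum only proves you have the file that was published, not that the publisher is trustworthy, so it complements provenance checks rather than replacing them.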

Myth 2: "Local means completely private"

Only if your entire pipeline is genuinely local. Many "local" setups still phone home for model updates, send telemetry data, or integrate cloud-based components for retrieval-augmented generation or fine-tuning. The privacy benefit depends on your specific configuration, not simply where the model weights reside.

Before assuming privacy, audit your complete stack. Check network traffic. Verify that your "local" solution isn't quietly making external calls.
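Part of that audit can be automated. The sketch below is a minimal, hypothetical helper: it assumes you have already collected destination IP addresses (for example from a packet capture, firewall logs, or `ss -tn` on Linux) and simply flags those that Python's `ipaddress` module does not classify as loopback, link-local, or private.

```python
import ipaddress
from typing import Iterable


def external_destinations(addresses: Iterable[str]) -> list[str]:
    """Return the unique destinations that leave this machine and its local network.

    Accepts IP literals only; ipaddress.ip_address() raises ValueError for hostnames.
    """
    flagged = []
    for raw in addresses:
        ip = ipaddress.ip_address(raw)
        # Loopback, link-local and private ranges are treated as "local" here.
        if not (ip.is_loopback or ip.is_link_local or ip.is_private):
            flagged.append(raw)
    # dict.fromkeys drops duplicates while keeping first-seen order.
    return list(dict.fromkeys(flagged))
```

Anything this returns deserves an explanation: a model-update check, a telemetry endpoint, or a cloud component you didn't know was in the pipeline. Bear in mind that a private address can still be another machine on your network, so an empty result is a starting point rather than proof of isolation.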

Myth 3: "Cloud AI providers are reading all your data"

This fear is often overstated. Reputable providers typically implement strong contractual commitments and technical controls around data handling. Enterprise tiers frequently offer zero-retention policies and dedicated infrastructure.

That said, the risk isn't zero—particularly for highly regulated data where even theoretical exposure matters, or where regulatory requirements mandate specific data residency. The question isn't whether cloud providers will misuse your data, but whether your compliance framework permits the data to leave your perimeter at all.

The Genuine Advantages of Local Models

With the myths addressed, let's examine where local deployment genuinely shines:

Data Sovereignty and Regulatory Compliance

For healthcare, finance, legal, and defence sectors, data residency requirements aren't optional. When regulations mandate that sensitive data never leaves your infrastructure, local models aren't just preferable - they may be the only compliant option. GDPR, HIPAA, and sector-specific regulations increasingly scrutinise where AI processing occurs.

Operational Independence

Cloud dependencies create operational risk. API outages, rate limits, sudden policy changes, or provider discontinuation can disrupt critical workflows. With local models, you control availability. This matters enormously for mission-critical applications where downtime has serious consequences.

Complete Auditability

Compliance audits require visibility into your entire stack. Local deployment means you own every component - from model weights to inference code to logging infrastructure. This comprehensive auditability is often impossible with cloud services, where significant portions of the stack remain opaque.

Air-Gapped Deployment

Some environments genuinely cannot connect to the internet. Classified government systems, critical infrastructure, and certain financial trading floors require complete network isolation. Local models make AI capabilities possible in these contexts where cloud solutions simply cannot operate.

Reduced Network Attack Surface

No API keys to leak. No man-in-the-middle risks on inference calls. No authentication tokens to compromise. While local deployment introduces its own attack vectors, eliminating network-based vulnerabilities is a genuine security improvement for many threat models.

The Real Risks You Shouldn't Ignore

A balanced view requires acknowledging where local deployment creates challenges:

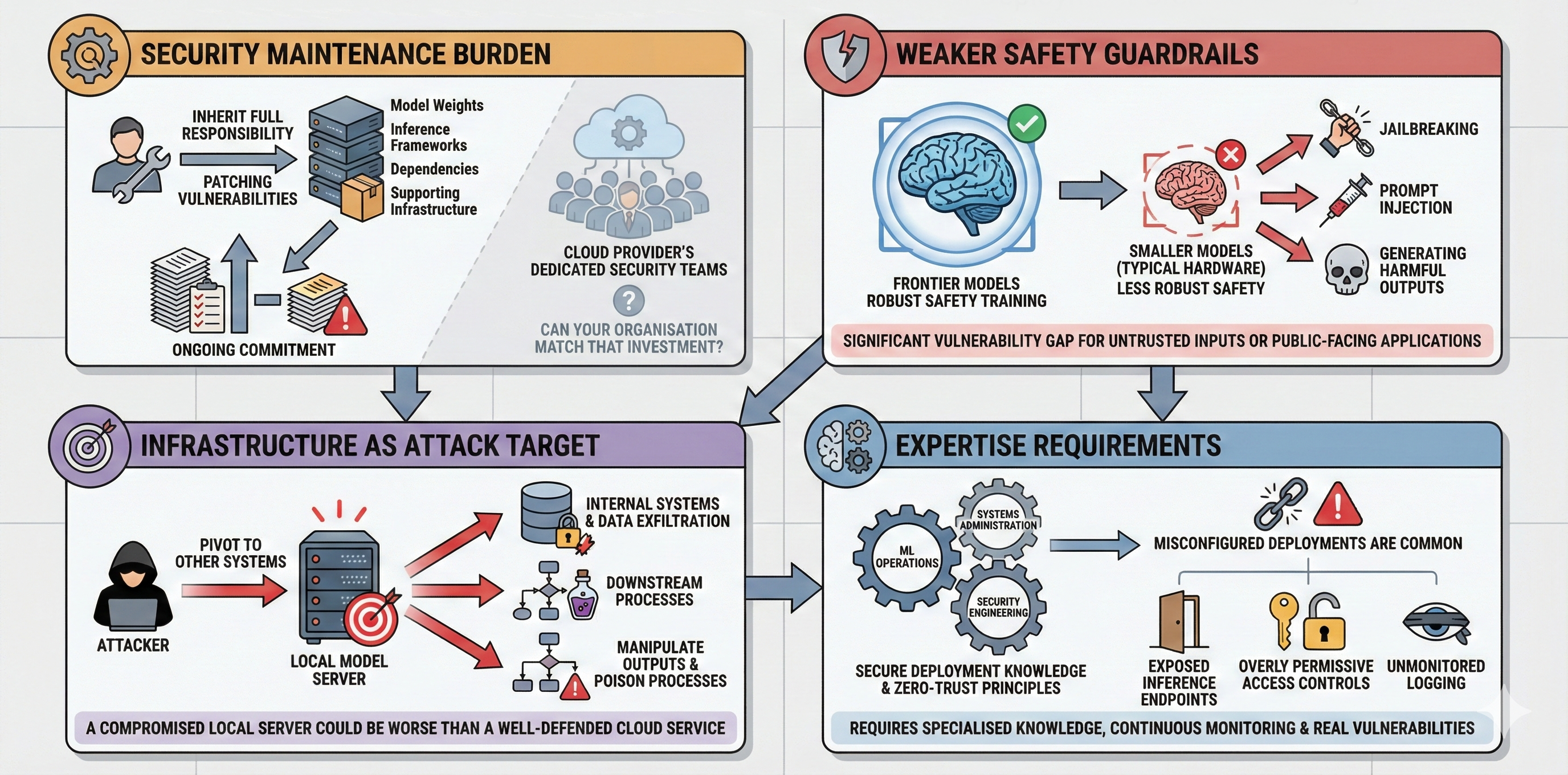

Security Maintenance Burden

You inherit full responsibility for patching vulnerabilities in model weights, inference frameworks, dependencies, and supporting infrastructure. Many organisations underestimate this ongoing commitment. A cloud provider dedicates entire teams to security; can your organisation match that investment for your local deployment?

Weaker Safety Guardrails

Smaller models - the kind practical to run on typical hardware - generally have less robust safety training than frontier models. They're more susceptible to jailbreaking, prompt injection, and generating harmful outputs. If your use case involves untrusted inputs or public-facing applications, this vulnerability gap matters significantly.

Infrastructure as Attack Target

Your local model server becomes a target. A compromised inference server could be worse than using a well-defended cloud service—attackers gaining access to your internal model deployment might pivot to other systems, exfiltrate data, or manipulate outputs in ways that poison downstream processes.

Expertise Requirements

Secure deployment requires specialised knowledge spanning ML operations, systems administration, security engineering, zero-trust principles, and continuous monitoring. Misconfigured deployments are common and create real vulnerabilities: exposed inference endpoints, overly permissive access controls, and unmonitored logs are recurring issues in the wild.
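Those recurring misconfigurations are easy to check for mechanically. A minimal sketch, assuming a hypothetical `InferenceServerConfig` whose fields you would map onto whatever settings your inference server actually exposes:

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass
class InferenceServerConfig:
    # Hypothetical fields; real inference servers name and structure these differently.
    host: str
    port: int
    auth_token: Optional[str] = None
    request_logging: bool = False


def audit(config: InferenceServerConfig) -> list[str]:
    """Return human-readable findings; an empty list means none of these checks failed."""
    findings = []
    try:
        # 0.0.0.0 and :: listen on every interface, so only loopback counts as safe.
        exposed = not ipaddress.ip_address(config.host).is_loopback
    except ValueError:
        # Any hostname other than "localhost" may resolve to a routable interface.
        exposed = config.host != "localhost"
    if exposed:
        findings.append(f"endpoint bound to {config.host}:{config.port} is reachable from other hosts")
    if not config.auth_token:
        findings.append("no authentication: anything that can reach the port can query the model")
    if not config.request_logging:
        findings.append("request logging disabled: there is nothing to monitor for misuse")
    return findings
```

A check like this is most useful in a deployment pipeline, where it catches configuration drift on every change. It cannot tell you whether the logs it insists on are actually being reviewed; that part remains an operational commitment.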

Making the Right Choice for Your Threat Model

The local versus cloud decision shouldn't be ideological. It should flow from your specific threat model:

- If your primary concern is data exfiltration to third parties (regulatory requirements, competitive sensitivity, or privacy obligations), local deployment offers genuine advantages.

- If your concern is sophisticated attacks on your infrastructure (nation-state actors, advanced persistent threats), a well-resourced cloud provider may offer better security than most organisations can achieve independently.

- If you need air-gapped operation or complete auditability, local is likely your only option, but invest accordingly in security practices.

The Geopolitical Dimension

There's an elephant in the room that many cybersecurity discussions dance around, play down, or overdramatise: supply chain trust and the national origin of AI systems.

Concerns about Chinese-developed models—whether from companies like Alibaba, Baidu, or open-weight releases like DeepSeek—have entered mainstream security conversations. These concerns typically fall into a few categories:

Legitimate considerations:

- Legal frameworks in some jurisdictions (China's National Intelligence Law, for instance) can compel companies to cooperate with state intelligence activities. This creates genuine uncertainty about data handling, even when providers claim otherwise.

- For government contractors, defence applications, or critical infrastructure, regulatory frameworks may explicitly prohibit certain foreign-developed technologies.

- Supply chain integrity matters. Understanding where your model weights originated, who trained them, and on what data is reasonable due diligence.

Where it gets murkier:

- Open-weight models that you download and run locally don't "phone home" by default—the same network isolation benefits apply regardless of where the model originated.

- The training data and potential biases in any model (domestic or foreign) warrant scrutiny. This isn't unique to Chinese models; if anything, transparency is often lower in closed-source domestic offerings, and all models should be evaluated by the same standard.

- Blanket suspicion based on national origin alone can veer into territory that's more political than technical.

A balanced approach: The practical security question isn't "is this model Chinese?" but rather: "Do I have visibility into this model's behaviour? Can I audit it? Does running it locally eliminate the network-based risks I'm concerned about?"

For highly sensitive applications, erring toward models with clearer provenance and alignment with your regulatory environment makes sense. For general productivity use cases running fully air-gapped, the origin of the weights may matter less than how you've secured the deployment.

This is genuinely contested territory where reasonable security professionals disagree. What matters is making conscious, documented decisions rather than either dismissing concerns entirely or applying blanket restrictions without technical justification.

The Bottom Line

Local language models aren't inherently more or less secure than cloud alternatives. They're differently secure, with distinct strengths and vulnerabilities that align better or worse with specific use cases and threat models.

For organisations handling highly sensitive data under strict regulatory requirements, local models offer compelling advantages that may justify the additional operational burden. For others, the security expertise and infrastructure of major cloud providers might actually deliver better outcomes.

The key is making this decision based on your actual risks and capabilities—not on myths about what "local" or "cloud" inherently means for security. Understand your threat model, assess your resources honestly, and choose accordingly.

Related Posts

Why System Prompts will never cut it as Guardrails

Explore the hidden challenges of system prompts in AI and how they can undermine your safety mechanisms.

How to sandbox Claude Code with nono

Claude Code runs with your full user permissions. Every file, credential, and command is accessible. nono uses kernel-level sandboxing to enforce default-deny access with no escape hatch — setup takes 30 seconds.

Why I built nono

I watched the same pattern play out with software supply chains. Now AI agents run with full user permissions and no boundaries. nono is kernel-level sandboxing that makes unauthorised operations structurally impossible.

Want to learn more about Always Further?

Come chat with a founder! Get in touch with us today to explore how we can help you secure your AI agents and infrastructure.